In this blog post, we illustrate how to configure JSOUP in ColdFusion and use it to extract HTML content. JSOUP is a Java-based library for working with HTML content. It provides a very convenient API for extracting and manipulating HTML, using the best of DOM, CSS, and jQuery-like selector methods.

If you want to access data from a third-party application, the reliable way is through its API. But if the application provider doesn't offer any API or SOAP access, we have no option other than web scraping, also known as HTML parsing. ColdFusion provides the handy cfhttp tag, which is enough to fetch a web page's content. Suppose a page lists user details in a table, along with the page's header and footer content. Scraping only the user information out of the whole page's HTML with string manipulation functions or regular expressions is a tedious and time-consuming task. A neat and easy solution is JSOUP, a Java-based library distributed as a JAR. Using the JSOUP jar, we can easily traverse, fetch, and manipulate particular HTML data from the whole page as needed.
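As a minimal sketch of the fetching step, the snippet below requests a page with cfhttp and keeps the response body for later parsing. The URL, the `pageResponse` and `pageHtml` variable names, and the 30-second timeout are assumptions chosen for illustration, not values taken from the original application.

```cfml
<!--- Fetch the raw HTML of a page. The URL below is an assumed example. --->
<cfhttp url="https://en.wikipedia.org/wiki/List_of_countries_by_population"
        method="get"
        result="pageResponse"
        timeout="30" />

<!--- statusCode holds a string such as "200 OK" --->
<cfif pageResponse.statusCode contains "200">
    <!--- fileContent holds the response body, here the page HTML --->
    <cfset pageHtml = pageResponse.fileContent />
<cfelse>
    <cfthrow message="Request failed: #pageResponse.statusCode#" />
</cfif>
```

At this point `pageHtml` is just one large string, which is why a real HTML parser is preferable to string functions for the extraction step.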

We are currently using ColdFusion 2016, which runs on Java version 1.8.0_72.

At the time of writing, the latest JSOUP jar requires Java 1.5 or above. Check your Java version in the ColdFusion Administrator under the "Settings Summary" tab and confirm that it is 1.5 or above; otherwise, you need to update your Java version.
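If you would rather check the version from code than from the administrator, one possible approach is to read the standard `java.version` system property. This is only a sketch; the `javaVersion` variable name is an illustrative choice.

```cfml
<cfscript>
// Read the JVM version that ColdFusion is running on
javaVersion = createObject("java", "java.lang.System").getProperty("java.version");
writeOutput("ColdFusion is running on Java " & javaVersion);
</cfscript>
```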

Download the latest version of the JSOUP jar file from the MVN Repository.

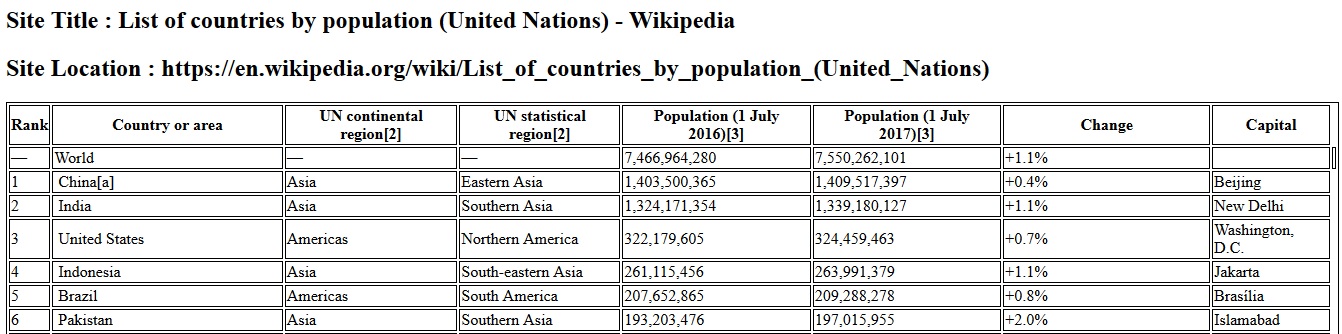

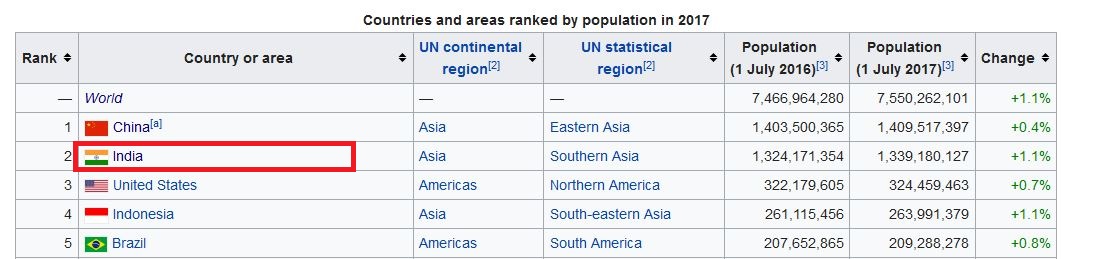

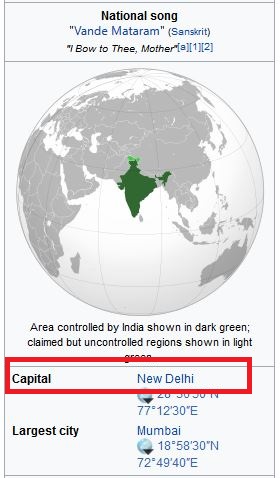
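Once the jar is downloaded, ColdFusion needs to be able to find it. One option, sketched below, is the application-level `this.javaSettings` setting available in ColdFusion 10 and later. The application name and the `./lib` folder (relative to Application.cfc) are assumptions for this example; placing the jar in the server's own lib folder and restarting ColdFusion is another common approach.

```cfml
// Application.cfc
component {
    this.name = "jsoupDemo"; // hypothetical application name

    // Load every jar found in the "lib" folder next to this file
    this.javaSettings = {
        loadPaths = ["./lib"],
        loadColdFusionClassPath = true,
        reloadOnChange = false
    };
}
```

With that in place, any template in the application should be able to obtain the JSOUP entry point with `createObject("java", "org.jsoup.Jsoup")`.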

Using JSOUP, we can parse HTML content from any web site as needed. Here we show a simple demo that parses the five most populous countries, along with each country's capital city, from the Wikipedia web site. The List of countries by population page ranks all countries and areas by population in a table, but it doesn't include the capital city. So while parsing, we pick up each country's link, fetch that country page's HTML content, and scrape the capital from that child page. Moving from one page to another like this is commonly called crawling or spidering a web site.

Above is a partial screenshot of the parent page, which holds the countries' population information. The capital city is available on each country's individual wiki page, which is linked from the "Country or area" column of this table. For example, for India, the capital details are on the page behind the India link. In the same way, we can parse any number of nested pages and scrape the needed content from those child pages.
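The parent-page step described above can be sketched as follows. To keep the example independent of Wikipedia's live markup, it parses a small hand-written table; the `sampleHtml` content, the `table.wikitable` selector, and the variable names are assumptions, and the real page may need a different selector. In the actual application the HTML would come from cfhttp instead.

```cfml
<cfscript>
// Hypothetical, simplified markup standing in for the fetched parent page
sampleHtml = '<table class="wikitable">'
    & '<tr><th>Country or area</th></tr>'
    & '<tr><td><a href="/wiki/China">China</a></td></tr>'
    & '<tr><td><a href="/wiki/India">India</a></td></tr>'
    & '</table>';

jsoup = createObject("java", "org.jsoup.Jsoup");

// The second argument is the base URI used to resolve relative links
doc = jsoup.parse(sampleHtml, "https://en.wikipedia.org/");

// Select the country links inside the table cells
links = doc.select("table.wikitable td a[href]");

// Keep at most the first five links
maxRows = min(5, links.size());
countries = [];
for (i = 0; i < maxRows; i++) {
    // Elements is a Java list, so it is indexed from 0
    link = links.get(javaCast("int", i));
    arrayAppend(countries, {
        name = link.text(),
        url  = link.attr("abs:href") // absolute URL of the child page
    });
}

writeDump(countries);
</cfscript>
```

Each `url` collected here is what a crawler would pass to the next cfhttp call in order to fetch the child page.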

My simple application's file structure looks like this:

JSOUP provides plenty of selectors to find or manipulate elements using a CSS or jQuery-like selector syntax. It also provides DOM methods to navigate a document and to extract and manipulate its data. In our example, we used JSOUP DOM methods such as text(), nextElementSibling(), and attr() to extract data from the HTML, together with selectors such as th:contains() and the class selector table.geography to find particular HTML elements in the document.
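To show how these selectors and DOM methods might fit together, here is a minimal sketch that pulls the capital out of a country page. The `countryHtml` markup is a hypothetical, heavily simplified stand-in for a real infobox, and the variable names are illustrative; this is not the original application's code.

```cfml
<cfscript>
// Hypothetical, simplified infobox markup from a country page
countryHtml = '<table class="infobox geography">'
    & '<tr><th>Capital</th><td>New Delhi</td></tr>'
    & '</table>';

jsoup = createObject("java", "org.jsoup.Jsoup");
countryDoc = jsoup.parse(countryHtml);

// Find the header cell whose text contains "Capital"
capitalHeader = countryDoc.select("table.geography th:contains(Capital)").first();

capital = "";
// first() returns a Java null when nothing matches, so guard before using it
if (!isNull(capitalHeader)) {
    // The value sits in the cell right after the header cell
    capitalCell = capitalHeader.nextElementSibling();
    if (!isNull(capitalCell)) {
        capital = capitalCell.text();
    }
}

writeOutput(capital); // New Delhi
</cfscript>
```

The null checks matter on real pages, where a country's infobox may be laid out differently or may not match the selector at all.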

When we run the application, we get a result like this: the country details from the List of countries by population page, with the capital city (scraped from each country's linked page) as an additional column.